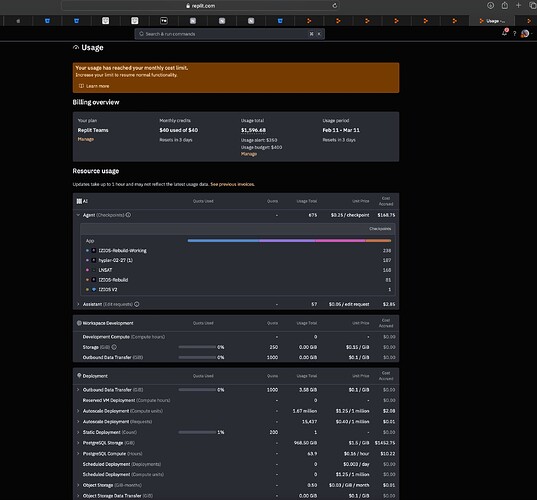

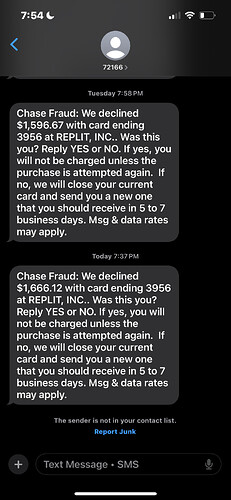

After using Agent 2, I had great success, but I have no idea how I accrued 900 GB of PostgreSQL storage. I am not storing images; I am only pulling data from third-party servers, simply the data from our vendor. I am having them look into what the hell could have taken up so much space. It kept going for days (which we account for; I set a $400 limit on checkpoints), and I don't know why the limit does not apply to other parts of the system. If it had triggered the limit, I would have noticed something was wrong. I am posting this so everyone is aware that this might be a bug with Agent 2, and if it is not a bug, it can go haywire, creating a lot of data and not cleaning up. There has to be a better way to manage this, because while we are building, the system may or may not let us know. I got a $1,500 attempted charge to my card, and now I'm stuck because I can't use the system until we settle this issue with Replit.

This is nuts. Update from Replit?

I’m waiting to hear back, they escalated it and are looking into it.

Hey, yes, this is escalated and we're now waiting on Neon to confirm what happened. I'll post an update once they get back!

OK, but I think there is a more concerning issue: the limits apparently do not warn you about database usage. That seems like a big problem, especially if there is an issue with how the agent decides to work on more complex problems, particularly when handling data. I have been hitting it hard for a few months now and it seemed to charge me accordingly, but all of a sudden there were huge charges for database storage, and it did not warn me at the $350 mark, or I would certainly have adjusted or tried to figure out what was going on in the background. This is a big problem. I will wait to hear back.

Hi there! Regarding the refund, could you email kody.low@repl.it and we’ll follow up with the next steps?

Regarding the issue you raised about the limits not warning you in time, I'll dig deeper to understand what happened there. It's definitely a key piece that should work reliably. There is also some work we need to do to make the database cost breakdown clearer to our users, and I'm talking to Neon about this as well. Tomorrow we are rolling out a change that lets users adjust the retention period on their databases to manage costs better. That should also give some recourse for this type of incident.

I appreciate you guys looking into this. I want to keep using the system; we have some cool stuff we are working on, but it's on pause, obviously, until we settle this. Thanks, I will email Kody.

What caused the database to balloon to that size?

Also interested to learn more about the retention period for databases feature.

No idea. It might be the Neon database rollbacks: I cut the history retention down from the default of 7 days to 12 hours, but it still shows a huge amount of data, and now I have a $1,666 balance again. I'm locked out. Is anyone else seeing anything similar? I think Agent 2 is creating too many rollback points; sometimes the agent will run for 4-5 minutes straight. I will probably need to go back to Agent 1.

@ertan-replit do we have the option to use our own AWS instead of Neon?

I did a post on the DB charges before. Neon charges for the total amount of data written, NOT for the DB size.

So if you have a 1 GB database that you wipe and reload 10 times, you'll be charged for 10 GB even though it never went above 1 GB.
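The wipe-and-reload arithmetic can be sketched as a toy model. This is a simplification under the poster's framing (billed history grows with every byte written while the current size stays capped), not Neon's actual billing logic; `simulate_reloads` is a hypothetical helper.

```python
# Toy model (assumption): every inserted byte produces write-ahead history,
# while the logical size only reflects what is currently stored.

GB = 1024 ** 3

def simulate_reloads(db_size_bytes: int, reloads: int) -> tuple[int, int]:
    """Return (peak logical size, total bytes written) after wiping and
    reloading a database of db_size_bytes `reloads` times."""
    logical = 0
    peak = 0
    written = 0
    for _ in range(reloads):
        logical = 0                 # wipe: the data is removed
        logical += db_size_bytes    # reload: the full dataset is inserted again
        written += db_size_bytes    # each reload writes the full dataset again
        peak = max(peak, logical)
    # Note: this ignores the history generated by the wipe itself,
    # so a real workload would write somewhat more than this.
    return peak, written

peak, written = simulate_reloads(1 * GB, 10)
print(peak // GB, written // GB)  # 1 10
```

The database never exceeds 1 GB, yet 10 GB of writes accumulate, which is the gap the post is describing.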

I personally have moved off Replit DBs and just use Amazon Postgres. It's much cheaper, and you don't get this funny charging.

So this is not ready for production, then. I guess I need to move off Replit. This is frustrating because our database is only around 800 MB, and this is during testing. Either way, the invoice was cleared, but I guess Neon still has the data, and I don't know how to reset this or remove the older copies if that's the case. I may have to get the data off the platform, which kind of defeats the purpose of using Replit. I like the simplicity, but this makes it impossible to predict costs.

There is nothing you can do. Delete the database and hope they reset it. That's the exact reason I don't use their DB at all. You can't control costs at all, and there is no transparency.

Okay, update here from Neon, and I had a sec to look deeper into this now that I'm back at my keyboard.

The big thing is that Neon's Postgres maintains history (the default was 7 days; we just shipped a feature on Replit to configure that so you can keep less), and your storage counts the accumulation of that history while it's saved.

This is critically important for Agent so that we can roll the database back to its state before an agent code change. Without it, we can't do rollbacks tied to database versioning, and it doesn't affect most users. In this case, whatever you were doing involved long-running, continuous database manipulations that accumulated gigabytes worth of history.

If you don't need the history feature, or the cost of maintaining it is excessive, you can now (with the feature shipped yesterday) cut the retention time down to limit the history portion relative to your current database.
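The trade-off is that a shorter window also limits how far back a rollback can reach. A minimal sketch, assuming (per Neon's explanation in this thread) that point-in-time restore only works within the retained history; `can_restore_to` is a hypothetical helper, not a Replit or Neon API, and the dates are made up for illustration.

```python
from datetime import datetime, timedelta

def can_restore_to(target: datetime, now: datetime, retention: timedelta) -> bool:
    """Hypothetical check: restoring to `target` needs history back to that
    moment, and history older than the retention window has been discarded."""
    return now - retention <= target <= now

now = datetime(2025, 1, 8, 12, 0)          # illustrative "current" time
checkpoint = now - timedelta(days=2)       # a rollback point from two days ago

print(can_restore_to(checkpoint, now, timedelta(days=7)))    # True
print(can_restore_to(checkpoint, now, timedelta(hours=12)))  # False
```

Under this model, dropping retention to 12 hours saves storage but means an agent checkpoint from two days ago can no longer be restored.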

From Neon:

"Let me explain the discrepancy you're seeing and provide some insights into what might be causing this issue. The difference between the reported storage (1166GB) and the main branch data size (632.02MB) is due to how Neon calculates storage usage. The total storage includes both the actual data size and the history of changes made to your data over time. This history, stored as Write-Ahead Log (WAL) records, enables features like point-in-time restore and time travel. Several factors could contribute to the sudden increase in storage usage:

- History Retention: The default retention period is 7 days. If your database undergoes frequent modifications, this can result in a significant amount of WAL data.

- Frequent Data Modifications: Operations like updates, inserts, or deletes generate more WAL records, increasing overall storage size.

Here's a quick explanation of synthetic storage: In Neon's architecture, logical size refers to the actual amount of data stored in a branch, encompassing all databases within that branch. This metric reflects the true size of your data without considering additional storage overhead. The synthetic size of your project includes both the database size and the history of changes, stored as Write-Ahead Logs (WAL). Each data write operation generates WAL records, so more frequent or larger writes will increase the synthetic size, thus using additional storage. WAL records are retained for a set period to support features like Point-in-Time Recovery and Time Travel. Once WAL records exceed this retention period, they are removed from storage and no longer count toward your project's storage usage. This combined total of database size and retained history forms the synthetic size, which is the basis for storage billing. To avoid increasing your synthetic storage, we recommend lowering your history retention, which is currently set to 604800 seconds (7 days)."
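Neon's description of synthetic size (logical size plus WAL still inside the retention window) can be sketched as a sliding window over write records. This is a simplified model, not Neon's billing code: `WalRecord`, `synthetic_size`, and the 5 GB/hour workload are hypothetical. Only the roughly 632 MB logical size and the 604800-second default come from this thread.

```python
from dataclasses import dataclass

MB = 1024 ** 2
GB = 1024 ** 3
HOUR = 3600

@dataclass
class WalRecord:
    age_seconds: int   # how long ago the write happened
    size_bytes: int    # WAL bytes generated by that write

def synthetic_size(logical_bytes: int, wal: list[WalRecord], retention_seconds: int) -> int:
    """Logical size plus WAL records that have not yet aged out of retention."""
    retained = sum(r.size_bytes for r in wal if r.age_seconds <= retention_seconds)
    return logical_bytes + retained

# Hypothetical workload: 5 GB of WAL written in each of the last 168 hours,
# each record stamped at the middle of its hour.
wal = [WalRecord(age_seconds=h * HOUR + HOUR // 2, size_bytes=5 * GB) for h in range(7 * 24)]
logical = 632 * MB

week = synthetic_size(logical, wal, 604800)          # default: 7 days
half_day = synthetic_size(logical, wal, 12 * HOUR)   # reduced: 12 hours
print(round(week / GB, 1), round(half_day / GB, 1))  # 840.6 60.6
```

With a steady write rate, cutting retention from 7 days to 12 hours keeps 12 of the 168 hourly records, roughly a 14-fold reduction in retained history, while the logical size is unchanged.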

That's all fine and dandy, but the real problem was that his max account spend was not respected. That needs to be fixed for all of us, as this is a real risk for every one of us.

I guess I have to figure out how to move the data off Replit. I actually don't have a problem with this, but it's a big problem if you don't know up front why it's happening.

Yeah, you're telling me. They voided the invoice, which was nice of them, but the data is still retained on Neon, so as soon as I started a new chat, it blew through my usage for the month after charging me $40. I'm locked out again with a $1,600 balance. I think I'll have to move the code and the data out and try something else. I like the agent, but it's way too unpredictable.

Why does this whole thing feel really inefficient and like ‘magic calculations’ instead of true system usage?

I deleted my account and moved on with my life. Sorry guys, I don't have time for this. It's not ready for production, obviously; companies cannot really rely on it for anything critical. It's a toy for making disposable toy software (I believe I even heard Matt Palmer mention on LinkedIn that they want to offer the ability to make disposable software). Well, they accomplished it: you build, build, build, and then dump it in the trash with no real results because it's unusable.